Why do I need efficiency when I have a great GPU?


I got the idea for this blog post when a friend of mine shared an answer on Quora. In it, Miguel Oliveira explains how a Machine Learning algorithm became far more efficient, taking less time even as the training batch size increased, just by switching to a data structure better suited to the problem. This idea is often overlooked by people in industry, leaving them wondering whether they should actually care about efficient algorithms.

In many areas, including research, Deep Learning is used as a black box, without realizing the implications of optimizing the underlying algorithms of the network architecture. Worse, some people do not even understand the network architecture itself. A few years ago I came across a blog post by Adit Deshpande that discusses the 9 Deep Learning papers you need to know, the papers that laid a strong foundation for current research and for the success of Deep Neural Networks. Reading through them, I find that optimizing an algorithm or using a better data structure strikingly improves performance, often many times over.

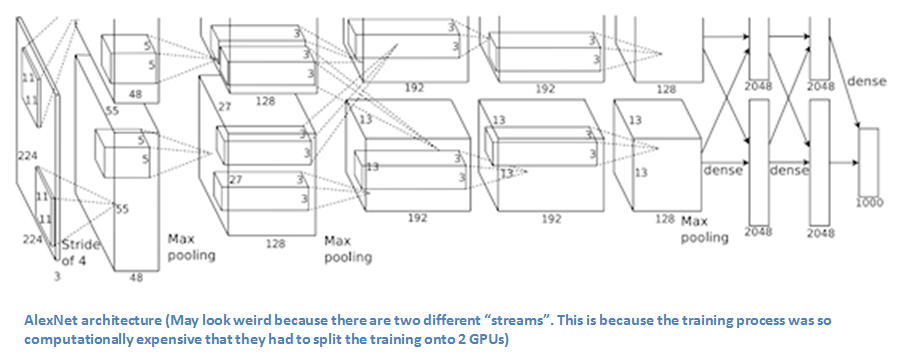

If we look into each of the recent breakthrough papers, most of them describe optimizations of algorithms that made neural networks perform efficiently. The first paper, AlexNet, introduces parallel computation (training the network across two GPUs) and reports results on a very large dataset, ImageNet. Its accuracy was significantly higher than earlier results, largely because parallel computation made it feasible to train such a large network. This work also marked the onset of the Deep Neural Network era: parallel processing, together with better hardware, made such results achievable much sooner than before.

Although computing power grew linearly, the efficiency of the data structures and algorithms underlying network architectures grew exponentially. This led to the rapid development of state-of-the-art Deep Learning algorithms in many areas. Further advances in classification networks, such as VGGNet, GoogLeNet and Microsoft ResNet, came from better parameter-update and visualization strategies and from stacking layers more efficiently. This made the underlying algorithms more complex at the implementation level.

In this post, however, I would like to discuss the trilogy of works by Ross Girshick et al. on Object Detection and Localization, and how simple improvements in the underlying algorithms led to significant improvements in performance.

Problem: Given an image, detect and localize the objects that belong to a given list of classes.

Basic intuition - Divide and Conquer

Input image:

- Divide the image into parts with horizontal and vertical cuts. (Divide)

- Consider each part. (Conquer)

- Pass each part through a classification network to get its output score and class prediction.

- Combine the parts that have a high output score and the same class prediction. (Combine)

- Regress the bounding box coordinates.
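The divide, conquer, and combine steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a real detector: `classify` is a hypothetical stand-in for the classification network that takes a tile's pixel box and returns a (label, score) pair, tiles below a score threshold are treated as background, and the combine step simply merges touching tiles that share a label. The final regression step is left out.

```python
from collections import deque
from typing import Callable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels; x1 and y1 are exclusive


def split_into_tiles(height: int, width: int, rows: int, cols: int) -> List[List[Box]]:
    """Divide: cut the image into a rows x cols grid with horizontal and vertical cuts."""
    ys = [round(i * height / rows) for i in range(rows + 1)]
    xs = [round(j * width / cols) for j in range(cols + 1)]
    return [[(xs[j], ys[i], xs[j + 1], ys[i + 1]) for j in range(cols)] for i in range(rows)]


def detect_by_tiles(
    height: int,
    width: int,
    rows: int,
    cols: int,
    classify: Callable[[Box], Tuple[str, float]],  # hypothetical: tile -> (label, score)
    threshold: float = 0.5,
) -> List[Tuple[str, Box]]:
    tiles = split_into_tiles(height, width, rows, cols)

    # Conquer: classify every tile independently and keep only confident labels.
    labels: List[List[Optional[str]]] = [[None] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            label, score = classify(tiles[i][j])
            if score >= threshold:
                labels[i][j] = label

    # Combine: merge 4-connected tiles with the same label into one box (BFS flood fill).
    seen = [[False] * cols for _ in range(rows)]
    detections: List[Tuple[str, Box]] = []
    for i in range(rows):
        for j in range(cols):
            if labels[i][j] is None or seen[i][j]:
                continue
            label = labels[i][j]
            x0, y0, x1, y1 = tiles[i][j]
            seen[i][j] = True
            queue = deque([(i, j)])
            while queue:
                r, c = queue.popleft()
                tx0, ty0, tx1, ty1 = tiles[r][c]
                x0, y0, x1, y1 = min(x0, tx0), min(y0, ty0), max(x1, tx1), max(y1, ty1)
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and not seen[nr][nc] and labels[nr][nc] == label:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            detections.append((label, (x0, y0, x1, y1)))
    return detections


if __name__ == "__main__":
    # Hypothetical classifier: two side-by-side tiles contain a "cat".
    def fake_classify(tile: Box) -> Tuple[str, float]:
        if tile[:2] in {(100, 100), (200, 100)}:
            return "cat", 0.9
        return "background", 0.2

    print(detect_by_tiles(400, 400, 4, 4, fake_classify))  # [('cat', (100, 100, 300, 200))]
```

Every tile costs one full pass through the classifier, which is exactly the cost the following methods try to reduce.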

R-CNN

- Find about 2K region proposals using Selective Search.

- Warp each region to the fixed input size of the network.

- Pass each warped region separately through a convolutional network to get its features.

- Pass the features to a classifier to get the output score and class prediction (the original paper uses per-class linear SVMs).

- Regress the bounding box coordinates.
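The "regress the bounding box coordinates" step predicts offsets relative to each proposal rather than raw coordinates. The sketch below shows the parameterization described in the R-CNN paper: center shifts scaled by the proposal's width and height, and log-space ratios for the size. Boxes are assumed to be (center_x, center_y, width, height) tuples, the network that predicts the deltas is not shown, and the function names are illustrative.

```python
import math
from typing import Tuple

# A box given as (center_x, center_y, width, height).
Box = Tuple[float, float, float, float]


def encode_box(proposal: Box, target: Box) -> Box:
    """Regression targets (t_x, t_y, t_w, t_h) that move `proposal` onto `target`."""
    px, py, pw, ph = proposal
    gx, gy, gw, gh = target
    return (
        (gx - px) / pw,     # center shift, relative to the proposal width
        (gy - py) / ph,     # center shift, relative to the proposal height
        math.log(gw / pw),  # width change in log space
        math.log(gh / ph),  # height change in log space
    )


def decode_box(proposal: Box, deltas: Box) -> Box:
    """Apply predicted deltas to a proposal; the inverse of encode_box."""
    px, py, pw, ph = proposal
    tx, ty, tw, th = deltas
    return (px + pw * tx, py + ph * ty, pw * math.exp(tw), ph * math.exp(th))


if __name__ == "__main__":
    proposal = (50.0, 50.0, 20.0, 40.0)
    target = (54.0, 46.0, 30.0, 20.0)
    deltas = encode_box(proposal, target)
    print(deltas[:2])  # (0.2, -0.1)
    print(decode_box(proposal, deltas))  # approximately the target box
```

Scaling the shifts by the proposal size makes the targets comparable between small and large proposals, and the log keeps the predicted width and height positive.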

Fast R-CNN

- Pass the input image through a fully convolutional network to get the feature map.

- Find region proposals on the input image using Selective Search, and project them onto the feature map.

- For each region, pool its part of the feature map to a fixed size (RoI pooling) and pass the result through fully connected layers to get the classification results.

- Regress the bounding box coordinates.
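The key change in Fast R-CNN is that the convolutional network runs once per image, and each proposal only pools its own window out of the shared feature map. Below is a minimal pure-Python sketch of RoI max pooling, assuming a single-channel feature map stored as nested lists, a network stride of 16 (as for VGG16's last convolutional layer), and a 2 × 2 output grid to keep the example small (the paper uses a larger grid, such as 7 × 7 for VGG16). The names are hypothetical, not a library API.

```python
import math
from typing import List, Tuple

FeatureMap = List[List[float]]            # single channel, indexed [row][col]
ImageBox = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in input-image pixels


def roi_max_pool(
    feature_map: FeatureMap, box: ImageBox, stride: int = 16, out_h: int = 2, out_w: int = 2
) -> List[List[float]]:
    height, width = len(feature_map), len(feature_map[0])

    # Project the image-space box onto the feature map (inclusive cell indices), clamped.
    fx0 = min(max(round(box[0] / stride), 0), width - 1)
    fy0 = min(max(round(box[1] / stride), 0), height - 1)
    fx1 = min(max(round(box[2] / stride), fx0), width - 1)
    fy1 = min(max(round(box[3] / stride), fy0), height - 1)
    roi_h, roi_w = fy1 - fy0 + 1, fx1 - fx0 + 1

    pooled = []
    for i in range(out_h):
        # Each output bin covers a (possibly uneven) slice of the region; never empty.
        ys = fy0 + math.floor(i * roi_h / out_h)
        ye = fy0 + math.ceil((i + 1) * roi_h / out_h)
        row = []
        for j in range(out_w):
            xs = fx0 + math.floor(j * roi_w / out_w)
            xe = fx0 + math.ceil((j + 1) * roi_w / out_w)
            row.append(max(feature_map[y][x] for y in range(ys, ye) for x in range(xs, xe)))
        pooled.append(row)
    return pooled


if __name__ == "__main__":
    fmap = [[4 * y + x for x in range(4)] for y in range(4)]  # computed once per image
    print(roi_max_pool(fmap, (0, 0, 48, 48)))    # [[5, 7], [13, 15]]
    print(roi_max_pool(fmap, (16, 16, 32, 32)))  # [[5, 6], [9, 10]]
```

Both proposals reuse the same `fmap`; only the cheap pooling and the fully connected head run per region, instead of a full network pass per region as in R-CNN.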

Faster R-CNN

- Pass the input image through a fully convolutional network to get the feature map.

- Find the region proposals on the feature map using a Region Proposal Network (RPN), which shares the convolutional features with the detector.

- For each region, pool its part of the feature map to a fixed size (RoI pooling) and find the classification results.

- Regress the bounding box coordinates.
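The Region Proposal Network scores a fixed set of reference boxes, called anchors, placed at every position of the shared feature map, and regresses each one toward an object. The sketch below only generates the anchors, using the 3 scales and 3 aspect ratios described in the Faster R-CNN paper (9 anchors per position). The stride of 16 and the choice of placing each anchor at the center of its feature-map cell are assumptions; reference implementations use slightly different offsets.

```python
import math
from itertools import product
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in input-image pixels


def generate_anchors(
    feat_h: int,
    feat_w: int,
    stride: int = 16,
    scales: Tuple[float, ...] = (128, 256, 512),
    ratios: Tuple[float, ...] = (0.5, 1.0, 2.0),
) -> List[Box]:
    anchors: List[Box] = []
    for y, x in product(range(feat_h), range(feat_w)):
        # Center of this feature-map cell, mapped back to image pixels (assumed convention).
        cx, cy = (x + 0.5) * stride, (y + 0.5) * stride
        for scale, ratio in product(scales, ratios):
            # Keep the area at scale**2 while making height / width equal to ratio.
            w = scale / math.sqrt(ratio)
            h = scale * math.sqrt(ratio)
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


if __name__ == "__main__":
    anchors = generate_anchors(2, 3)
    print(len(anchors))  # 54 = 2 * 3 positions * 9 anchors
    print(anchors[4])    # (-120.0, -120.0, 136.0, 136.0): scale 256, ratio 1.0
```

Because the proposals come from the same feature map the detector already computes, proposal generation costs only a small extra network head instead of a separate Selective Search pass.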

YOLO

- Resize the image to the network's fixed input size.

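- Pass the image through a fully convolutional neural network to get a fixed-size output of s × s × m × (c + 5): an s × s grid of cells, each predicting m boxes, with each box carrying 4 coordinates, 1 objectness score and c class scores.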

- Flatten the last two dimensions to get an output of dimension s × s × (m · (c + 5)).

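- Combine the predictions from all grid cells, keeping confident boxes and removing duplicates with non-maximum suppression, to get the results.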
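The last two YOLO steps can be sketched as decoding the flattened s × s × (m · (c + 5)) output and then combining the cells' predictions with greedy non-maximum suppression (NMS). This sketch assumes a hypothetical per-box layout of [x, y, w, h, objectness, class scores...], with x and y given relative to the cell, w and h relative to the image, and all values already passed through their activations; actual YOLO versions differ in these details.

```python
from typing import List, Tuple

Rect = Tuple[float, float, float, float]  # (x0, y0, x1, y1), normalized to [0, 1]
Detection = Tuple[int, float, Rect]       # (class_id, score, box)


def iou(a: Rect, b: Rect) -> float:
    """Intersection over union of two boxes."""
    inter = max(0.0, min(a[2], b[2]) - max(a[0], b[0])) * max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def decode_yolo(output: List[List[List[float]]], s: int, m: int, c: int,
                score_threshold: float = 0.3) -> List[Detection]:
    detections: List[Detection] = []
    for row in range(s):
        for col in range(s):
            cell = output[row][col]  # flat list of length m * (c + 5)
            for k in range(m):
                x, y, w, h, obj, *cls = cell[k * (c + 5):(k + 1) * (c + 5)]
                best = max(range(c), key=lambda i: cls[i])
                score = obj * cls[best]  # objectness times class score
                if score < score_threshold:
                    continue
                cx, cy = (col + x) / s, (row + y) / s  # cell-relative -> image-relative
                detections.append((best, score, (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)))
    return detections


def nms(detections: List[Detection], iou_threshold: float = 0.5) -> List[Detection]:
    """Greedy NMS: keep the best box, drop same-class boxes that overlap it too much."""
    kept: List[Detection] = []
    for det in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(det[0] != k[0] or iou(det[2], k[2]) < iou_threshold for k in kept):
            kept.append(det)
    return kept


if __name__ == "__main__":
    s, m, c = 2, 2, 2
    zero = [0.0] * (c + 5)
    out = [[zero * m for _ in range(s)] for _ in range(s)]
    # Two overlapping class-0 boxes in the top-left cell, one class-1 box bottom-right.
    out[0][0] = [0.5, 0.5, 0.4, 0.4, 0.9, 0.8, 0.2] + [0.5, 0.5, 0.38, 0.38, 0.7, 0.9, 0.1]
    out[1][1] = [0.5, 0.5, 0.2, 0.2, 0.9, 0.1, 0.9] + zero
    print(len(nms(decode_yolo(out, s, m, c))))  # 2
```

A single forward pass produces every box at once, so the only per-box work left is this cheap decoding and suppression. The NMS here only compares boxes of the same class; a class-agnostic variant would drop the label check.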

Having a good GPU and plenty of computing power is not enough, because we do not have infinite compute. Researchers keep finding better, more efficient solutions, and these help greatly.